
Supercharge your UX Process with AI Tools

At the end of 2022, generative language models had their big moment, dominating the news and social media. Now that the hype is beginning to fade, it is time to look at the real value this new technology can provide to us User Experience practitioners in our everyday work.

Intro: How to talk about Artificial Intelligence

Artificial intelligence is nothing new: its theories and concepts have been around for decades. To understand how we arrived at today's point of convergence, we need to consider the developments that enabled it: the massive increase in computing power, the advent of the internet, cloud computing, and, last but not least, advances in neuroscience.

When we talk about AI, what are we actually talking about? A lot of terminology is floating around, often used inconsistently.

- Machine learning refers to algorithms that improve based on a specific data set but still require some human intervention. A typical example is the spam filter in your e-mail account.

- Deep learning is a subset of machine learning that uses multi-layered neural networks to improve itself and requires far less human intervention. One example is Google Lens, which can recognise things in photos and translate text.

- AI can be viewed from the perspective of data science or statistics, and it belongs to the wider realm of informatics.

- Challenges in robotics (think also of self-driving cars) require a combination of almost all approaches in AI, such as computer vision, cognitive modelling, and natural language processing.

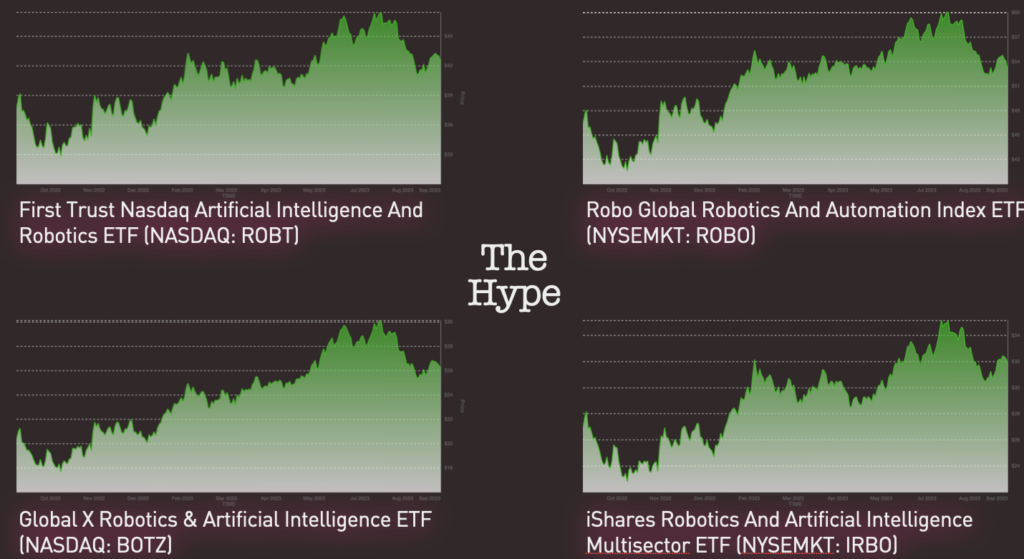

The Hype

What is a better hype indicator than the stock market? Let’s have a look at several ETFs that track the Artificial Intelligence and Robotics market.

They all show a similar picture: after peaking in July 2023, they lost value during August. This goes hand in hand with an overall decline in investment volume in AI companies and technologies.

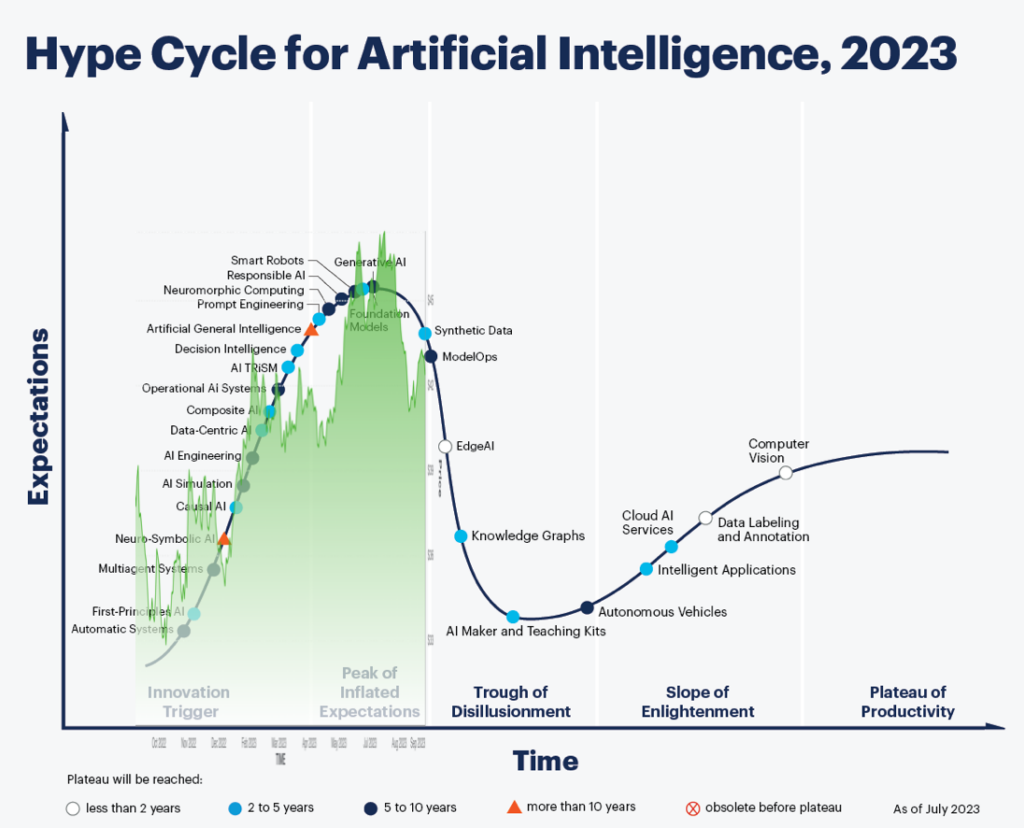

Let’s now take a look at Gartner’s 2023 hype cycle for AI. In a recent press release, Gartner placed generative AI at the peak of inflated expectations. Let’s try overlaying one of the ETF charts on their hype cycle.

We see a possible correlation here: if generative AI has indeed moved past the peak of inflated expectations, we are entering a new stage in the adoption of this technology.

This is also the moment to step back and take a closer look at the UX tool landscape that is forming around this new technology. I will address four areas of User Experience and discuss some examples and approaches.

User Research

When I do user research, I want to generate actionable items that I can assign to the various stakeholders: management to make decisions, frontend developers to fix bugs, UX to come up with improvements or new concepts, and product management to pick up feature ideas. I use Google Sheets to track and document the findings.

Original Process

My usual approach to user research sessions is to first hold the session in any video conferencing tool, such as Google Meet, and record all the video and audio with OBS.

Then, I would re-watch it bit by bit, extract the findings, and put them into a Google Sheet. This is by far the most painful and time-consuming part: I estimate it at approx. double the session length. Optionally, I would extract video highlights by importing the media into iMovie.

In total, recording and analysing a video would take three times the original session length: for a 60-minute session, I had to plan another 120 minutes for analysis.

Transcribe with Whisper.ai

A first attempt to reduce analysis time was automatic transcription. Whisper is OpenAI’s open-source speech recognition model; I used it through a freemium desktop app, choosing the free “Small” model as the transcription quality setting.

The major improvement here is that Whisper produces a time-stamped subtitle file. With this file and the video open side by side, I can scan the transcript faster and find the right position in the video whenever a part needs re-watching.
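For readers who prefer a script to a desktop app, here is a minimal sketch of producing such a subtitle file with the open-source `openai-whisper` Python package. It assumes the package and ffmpeg are installed, that the interviews are in German (`language="de"`), and it uses the “small” model mentioned above; the helper names are hypothetical. The package’s command-line tool can also write SRT directly, e.g. `whisper session.mp4 --model small --language de --output_format srt` (file name hypothetical).

```python
def format_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    millis = round(seconds * 1000)
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def segments_to_srt(segments: list[dict]) -> str:
    """Build SRT text from dicts with 'start'/'end' (seconds) and 'text' keys."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        # Each cue: running number, time range, text, then a blank separator line.
        blocks.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}\n")
    return "\n".join(blocks)


def transcribe_to_srt(media_path: str, srt_path: str, language: str = "de") -> None:
    """Transcribe a recording with Whisper's 'small' model and save it as SRT."""
    import whisper  # pip install openai-whisper; requires ffmpeg on the PATH

    model = whisper.load_model("small")
    result = model.transcribe(media_path, language=language)
    with open(srt_path, "w", encoding="utf-8") as f:
        f.write(segments_to_srt(result["segments"]))
```

A call such as `transcribe_to_srt("session-01.mp4", "session-01.srt")` (hypothetical file names) writes one numbered, time-stamped cue per segment. The Whisper import sits inside the function so the formatting helpers can be reused without the model installed.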

Sometimes findings and quotes can be copied directly from the subtitle file into the Google Sheet findings template. Otherwise, the aggregation remains the same: the interviewee’s original statements still need to be condensed and reformulated as findings. In case you are wondering, I use VS Code as an all-purpose text editor.
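Copying quotes out of the subtitle file can also be partly scripted. The sketch below parses SRT cues and collects every cue that mentions a keyword, together with its start time, as CSV rows. It assumes the findings template can be filled via a CSV import (Google Sheets offers File > Import for CSV files); the column names and the default stakeholder are hypothetical placeholders for your own template. Reformulating each quote as a finding remains a manual step.

```python
import csv


def parse_srt(text: str) -> list[tuple[str, str]]:
    """Return (start_timestamp, subtitle_text) pairs from SRT content."""
    cues = []
    normalized = text.replace("\r\n", "\n").strip()
    for block in normalized.split("\n\n"):
        lines = block.strip().splitlines()
        # A cue needs an index line, a timing line and at least one text line.
        if len(lines) < 3 or "-->" not in lines[1]:
            continue
        start = lines[1].split("-->")[0].strip()
        cues.append((start, " ".join(line.strip() for line in lines[2:])))
    return cues


def find_quotes(cues, keyword: str, stakeholder: str = "UX") -> list[list[str]]:
    """Rows for cues containing `keyword` (case-insensitive); 'Finding' stays empty."""
    return [
        [start, quote, "", stakeholder]
        for start, quote in cues
        if keyword.lower() in quote.lower()
    ]


def write_findings_csv(rows, csv_path: str) -> None:
    """Write rows under hypothetical template headers, ready for a Sheets import."""
    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["Timestamp", "Quote", "Finding", "Stakeholder"])
        writer.writerows(rows)
```

Keeping parsing, filtering and writing separate means the keyword filter can be swapped for any other selection rule without touching the file handling.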

Pros

- Cuts approx. half an hour from analysing a 60-minute session video

- Re-watching is a bit less painful as the subtitle file provides better orientation

Cons

- Whisper uses almost all the CPU on my laptop (MacBook Pro 2018), so it is virtually impossible to perform other tasks during transcription.

- The transcription process itself takes approximately as long as the original session duration (at least on my machine)

- The quality of the transcription is not really convincing (note: German-speaking interviewees, some with a regional dialect)

Integrated Tool Support: tl;dv

tl;dv offers an integrated solution for recording, transcribing, and analysing video sessions. It eliminates many of the steps outlined in the previous versions of the process above.

tl;dv connects directly to the video session and starts transcribing once the session has ended. This is usually quite fast, so analysis can start almost immediately after the call. Note that I still like to export the transcript as subtitles to skim through it in a text editor (again, VS Code in my case).

In my view, the biggest advantage of tl;dv is the synced video and transcript pane: when you jump to a section in the transcript, the video follows automatically. This addresses the biggest pain point of my original process. Extracting highlight videos from this view is easy, too.

Pros

- Cuts another half an hour from analysis, bringing the total for a 60-minute session (recording plus analysis) down to approx. 120 minutes

- The process becomes less painful

- Sync between video and transcript

Cons

- Freemium model; the Pro plan costs $30/month

- Summaries are a bit vague and high-level

- Findings still have to be extracted manually and entered into the Google Sheet
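The time savings across the three versions of the process follow from the rough estimates given above: analysis takes about twice the session length, and each tooling step saves roughly half an hour. This back-of-the-envelope sketch applies those estimates to a 60-minute session; it restates the estimates, it is not a measurement, and the stage labels are just shorthand.

```python
SESSION_MINUTES = 60


def analysis_minutes(session_minutes: int, saved_minutes: int = 0) -> int:
    """Analysis effort: about twice the session length, minus tool savings."""
    return 2 * session_minutes - saved_minutes


# Cumulative minutes saved per process version, per the estimates in the text.
stages = {
    "Original (OBS + manual review)": 0,
    "Whisper transcript": 30,
    "tl;dv": 60,
}

for name, saved in stages.items():
    analysis = analysis_minutes(SESSION_MINUTES, saved)
    print(f"{name}: {analysis} min analysis, {SESSION_MINUTES + analysis} min in total")
```

Running it gives totals of 180, 150 and 120 minutes, which is where the approx. 120 minutes for the tl;dv version comes from.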

Kraftful

With Kraftful, you can analyse vast amounts of transcribed data, comments, or reviews from different sources.

Pros

- You can chat with your insights and ask questions about them

- Analyse vast amounts of data

- A lot of potential for the future

- Language-independent (worked with German source data)

- Integrations with other sources (Salesforce, Zendesk, Jira, …)

Cons

- Not sure how reliable the percentages it calculates are

- Summaries and chats remain very vague

- It cannot analyse video (e.g. of a usability test), so it cannot say anything about the UI

Conclusion

With the proper tools and the necessary investment, you can streamline and speed up your user research considerably.

As these tools develop, we can expect them to become even more useful, hopefully automating the extraction of findings even further. I also expect multi-language support to improve.

Product managers will already find these tools very helpful, as they give a quick overview of consumer sentiment from app store reviews and other sources of feedback.

User Interface Design and Prototyping

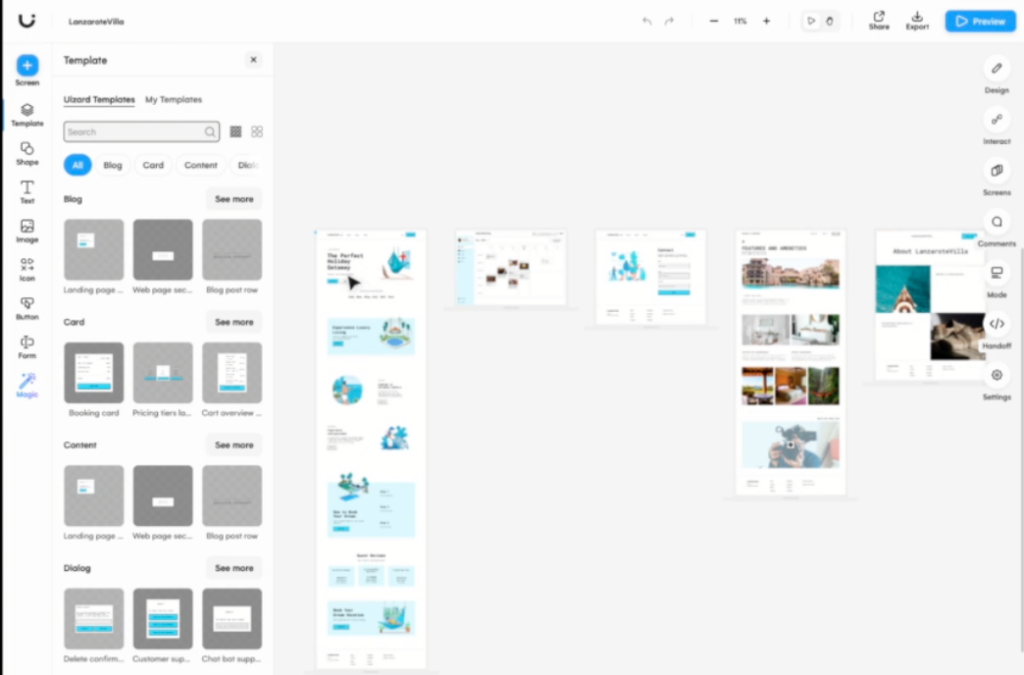

Let’s take a look at AI tooling for another major area of UX. Uizard comes with a set of AI tools, the most prominent being Autodesigner. Uizard is not free, and paying users can access the AI capabilities via a waitlist. Note that the AI features are still in beta.

Autodesigner

You describe the project you want. In my case, I asked Autodesigner to design a landing page for a holiday home in the Canary Islands and gave it some context for the style, like “volcanic, white villa, sea, sun”. It came back with the design below.

What was striking was that the colour theme it chose did not fit the style I had asked for. It also came up with illustrations, although I had asked for photos. And while I had asked for a calendar widget on the landing page, it created a separate calendar page instead.

Theme Generator

As the colour palette did not fit a lush holiday home, I decided to update it with Theme Generator. I picked an image of the Canary Islands from the web that contained the typical colours, hoping the tool would extract the main colours and apply them to the design.

However, it only replaced the background colour, not the foreground colour. Buttons and other elements also remained untouched by the new colour palette.
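Uizard does not document how Theme Generator works, so the following is only an illustration of the general idea of extracting main colours from a photo: quantize each pixel into coarse colour buckets and count the most frequent ones. This is a naive technique chosen for brevity (more robust approaches such as k-means clustering exist), the function name and bucket size are hypothetical, and mapping the palette onto foreground, buttons and other elements would be a separate step that is not shown.

```python
from collections import Counter


def dominant_colours(pixels, count: int = 3, step: int = 32) -> list[str]:
    """Return the `count` most frequent colours as hex strings.

    pixels: iterable of (r, g, b) tuples with channels in 0..255.
    step: bucket size per channel; larger values merge more similar shades.
    """

    def quantize(channel: int) -> int:
        # Map a channel to the centre of its bucket, capped at 255.
        return min(channel // step * step + step // 2, 255)

    buckets = Counter((quantize(r), quantize(g), quantize(b)) for r, g, b in pixels)
    return ["#{:02x}{:02x}{:02x}".format(*colour) for colour, _ in buckets.most_common(count)]
```

To feed it a real photo, one option is Pillow, whose `Image.open(path).convert("RGB").getdata()` yields such RGB tuples.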

Image Suggestion and Generation

You can get image suggestions either directly from within your design or you can generate images from a side panel.

The results of these two functions differ significantly: while the inline suggestions were much less precise, the image generator was spot on in my case.

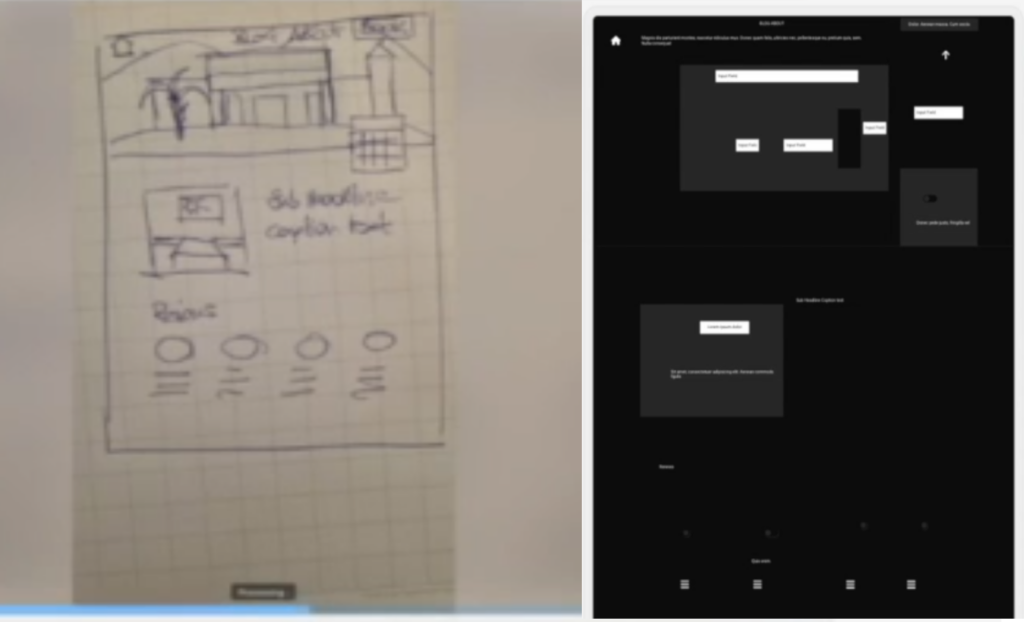

Wireframe Scanner

Uizard promises to scan hand-drawn wireframes.

I found this function unusable: Uizard was not able to create anything meaningful from a hand-drawn sketch.

Conclusion

In their current state, the AI tools in Uizard do not add much value to my design workflow. We will have to see where future developments take this tool.

Coding

I sometimes validate design patterns (from Figma et al.) by rebuilding them with web technologies. Let’s create a simple primary button with three states: idle, hover, and pressed. In the prompt, I ask GPT-4 to follow accessibility guidelines.

For this example, I am using Cursor AI and CodePen.

Cursor is designed to generate code directly in the code pane in the middle, but for the sake of demonstration, I simply copy the code from the right-hand pane into CodePen.

In the browser view, you see the styled button with its idle, hover, and pressed states already working. But the pressed state shown in the screenshot looks a bit off: the text and background colours do not seem to provide enough contrast. Let’s ask GPT-4 for the contrast ratios to make sure everything is correct.

It begins by explaining the formula it uses, then calculates the contrast ratio for each state. 16:1 is quite impressive! And still 12.63:1 for the pressed state… can this be correct? Let’s validate it with a contrast checker.
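For reference, the standard formula here is the WCAG 2.x definition of contrast ratio. Each 8-bit sRGB channel is scaled to $C_{\text{sRGB}} = C_{8\text{bit}}/255$ and linearised; the linear channels are weighted into a relative luminance $L$; and the ratio compares the lighter luminance $L_1$ with the darker $L_2$. Older WCAG texts give the threshold as 0.03928 instead of 0.04045, but no 8-bit channel value falls between the two, so both yield identical results.

$$
C_{\text{lin}} =
\begin{cases}
\dfrac{C_{\text{sRGB}}}{12.92}, & C_{\text{sRGB}} \le 0.04045 \\[6pt]
\left(\dfrac{C_{\text{sRGB}} + 0.055}{1.055}\right)^{2.4}, & \text{otherwise}
\end{cases}
$$

$$
L = 0.2126\,R_{\text{lin}} + 0.7152\,G_{\text{lin}} + 0.0722\,B_{\text{lin}},
\qquad
\mathrm{CR} = \frac{L_1 + 0.05}{L_2 + 0.05}, \quad L_1 \ge L_2
$$

As a sanity check, black text on white gives $\mathrm{CR} = (1 + 0.05)/(0 + 0.05) = 21$, the maximum possible ratio.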

This is interesting. While Cursor claims the contrast ratio of the pressed state is 12.63:1, it is in fact 3.77:1, which fails the WCAG AA minimum of 4.5:1 for normal text. It also miscalculated all of the other contrast ratios.
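Rather than trusting an LLM’s arithmetic, the contrast check can be scripted. Below is a minimal sketch of the WCAG 2.x relative-luminance and contrast-ratio calculation with an AA pass/fail check. The hex colours for the three button states are hypothetical stand-ins, since the actual values from the screenshot are not given here, and the function names are illustrative.

```python
def relative_luminance(hex_colour: str) -> float:
    """WCAG 2.x relative luminance of an sRGB colour such as '#0b57d0'."""
    hex_colour = hex_colour.lstrip("#")
    channels = [int(hex_colour[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Undo the sRGB transfer curve to get linear-light channel values.
    linear = [c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4 for c in channels]
    r, g, b = linear
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colours, from 1.0 up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """WCAG AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


# Hypothetical (text, background) colours per button state, not taken from the screenshot.
states = {
    "idle": ("#ffffff", "#0b57d0"),
    "hover": ("#ffffff", "#0842a0"),
    "pressed": ("#ffffff", "#7aa7f0"),
}

for name, (text, background) in states.items():
    ratio = contrast_ratio(text, background)
    verdict = "pass" if passes_aa(text, background) else "fail"
    print(f"{name}: {ratio:.2f}:1, AA {verdict}")
```

A useful reference pair for checking the script: grey `#767676` on white comes out at about 4.54:1 and just passes AA, while `#777777` lands at about 4.48:1 and fails.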

Pros

- Fantastic tool for learning how to code (or improving your skills)

- Big time-saver

Cons

- Not all code snippets work right away; some take several attempts

- Requires basic coding knowledge

- Miscalculations like the one we encountered might affect code output, too

- Cursor AI needs a lot of memory

Writing

In our last example, we’ll draft a project proposal using three different LLMs: Bing, ChatGPT (GPT-3.5), and Claude.

The task is to come up with a methodical project proposal for improving the conversion rate of an e-learning tool. The budget is $30,000, and the project runs for one year.

Bing comes up with two main phases, analytics and ideation. Interestingly, it goes directly from analytics to user interface design and frontend development, and it does not mention how to improve the conversion rate at all. The organisational setup is rather straightforward.

ChatGPT gives us a well-structured approach with many phases. It separates analysis, prototyping, and testing, and adds a training and monitoring phase. Again, there is no mention of the conversion rate. It proposes a rather complex organisational setup, and I wonder whether all of these activities would fit into a $30,000 budget.

Claude provided the approach I liked most, at least for a conventional analysis, concept, research, and design project. It even states the duration of each phase, together with a proposed number of interviews. For the organisational setup, I also like its idea of streams as opposed to roles.

However, it does not mention the conversion rate either. And again, I doubt that everything fits into the available budget. So let’s double-check: I told Claude to allocate resources in person-hours to each activity, assuming a project budget of $30,000 and an hourly rate of $100. That gives Claude 300 hours to allocate.

It comes back with the allocation and the following claim: “Total person hours: 2,400. At $100 per hour, this fits within the $30,000 budget”. As this is obviously wrong (2,400 hours at $100 would cost $240,000), let’s go back and try again.

In its revision, it does correct the allocations and states that it managed to stay within budget. However, the positions add up to 620 hours, which at $100 per hour comes to $62,000, more than twice the budget.
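The check that exposed both answers is plain arithmetic, and it is worth scripting before trusting any LLM-generated allocation. The sketch below sums an allocation and compares its cost with the budget. The phase names and their hours are hypothetical, chosen only so that they add up to the 620 hours of Claude’s revision; the function names are illustrative.

```python
def max_hours(budget: float, hourly_rate: float) -> float:
    """Hours the budget can pay for at the given rate."""
    return budget / hourly_rate


def check_allocation(
    allocation: dict[str, float], budget: float, hourly_rate: float
) -> tuple[float, float, bool]:
    """Return (total hours, total cost, fits within budget) for an allocation."""
    total_hours = sum(allocation.values())
    cost = total_hours * hourly_rate
    return total_hours, cost, cost <= budget


# Hypothetical allocation that sums to the 620 hours of the revised answer.
revised = {"Analysis": 120, "Research": 160, "Concept": 140, "Design": 200}

hours, cost, fits = check_allocation(revised, budget=30_000, hourly_rate=100)
print(f"Available: {max_hours(30_000, 100):.0f} h")
print(f"Allocated: {hours} h = ${cost:,}, within budget: {fits}")
```

With a budget of $30,000 and a rate of $100, only 300 hours are available, so both the claimed 2,400 hours and the revised 620 hours fail the check.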

Pros

- Good for creating ideas

- Perfect for writing texts of a certain length (or shortening or extending them)

Cons

- None of the three addressed the key point of the prompt: increasing conversion

- Claude hallucinated heavily when it came to allocating and calculating hours

Conclusion